Migrating V4 Imports to ETL Executions

If you have consulted the Profile tables data sync use case some time ago, you've probably implemented Step 4 (mass importing profiles in a CSV file) with the following deprecatedv4 operation:

POST /mass-imports/v4/entity/MyEntity/table/MyTable/import

Thanks to ETLs and the v5 API, there are 2 new more advanced ways to push your data file directly through an API call:

- ETL executions triggered by API, where you push the data file with a predefined import definition

- one shot ETL executions, where you push the data file and the import definition at once

File imports are usually recurring, with a pattern where you'll make the same calls with the same parameters, because you are feeding the same table frequently, with the same mapping and import mode.

We therefore believe that in most cases, the newer 'ETL executions triggered by API' explained below is the most suitable option.

Both these options gives you access to all the advantages of ETLs, such as data transformation or retrieving the file from a remote server.

But they still let you push the file directly in the body of the call and can work exactly like the v4 bulk import, only with additional options!

As announced in our changelogs and maintenances pages, the deprecated v4 imports will be removed by the end of June 2025.

We invite you to update your calls with one of the 2 alternatives.

Why choose ETL executions triggered by API?

With ETL executions triggered by API, you need to set up a fixed ETL definition beforehand, so you don't have to push the imports parameters every time.

For instance, if you are regularly importing orders data from your physical stores, the data file will always be pushed into your "Orders" interaction tables, with the same mapping because it contains the same columns. You can identify this import as a recurring one, with a fixed definition, and only push the data file itself.

Of course, you can have several recurring imports: one into your "Profiles" table, one into your "Orders" tables, maybe even an "Order lines" table, etc. While you can set up an ETL for each of these cases, executions triggered by API allow you to set up a multifiles process, to push all files at once and guarantee that they are imported in the correct sequence.

In addition, setting up the definition in advance allow marketeers to visualize the existing active data flows in the UI. This lets them know that recurring flows are in place and to visualize the definition of active synchronizations that are only awaiting a data file pushed by API.

Setting up an ETL execution triggered by API

If you do not need to push the import definition every time because you have identified a recurring pattern in your API calls, you should proceed in 2 steps:

- Create an automated ETL with a fixed definition

- Trigger it through an API call

Creating an automated ETL

This is one time operation done through the following operation:

POST /mass-imports/v5/entities/MyEntity/etls

with the TRIGGERED type and the API type in the "triggering" object.

{

"type": "TRIGGERED",

"name": "lead-generation-automated-integration",

"description": "The integration of generated lead all along the day",

"triggering": {

"type": "API",

"paused": false

},

"reportRecipients": [

"john.smith@actito.com"

],

"fileTransfer": {

"input": {

"files": [

{

"fileCode": "leads",

"fileNamePattern": "leads_$YYYYMMDD.csv",

"compressionType": "ZIP",

"compressedFileNamePattern": "leadgeneration_$YYYYMMDD.zip"

}

]

},

"output": {

"location": {

"type": "REMOTE",

"remoteLocationId": "1"

}

}

},

"inputFilesProperties": [

{

"fileCode": "leads",

"csvFormat": {

"encoding": "UTF-8",

"separator": ";",

"enclosing": "\""

}

}

],

"dataTransformations": [

{

"fileCode": "leads",

"transformations": [

{

"header": "acquisitionMoment",

"transformation": {

"type": "DATE_FORMAT",

"format": "MM-dd-yyyy HH:mm:ss"

}

}

]

}

],

"dataLoadings": [

{

"fileCode": "leads",

"destination": {

"type": "PROFILE_TABLE",

"id": "1",

"attributesMapping": [

{

"header": "eMail",

"attributeName": "emailAddress",

"ignoreEmptyValues": false,

"ignoreInvalidValues": false,

"ignoreValuesWhenAlreadyKnown": false,

"mergeValuesWhenMultivalued": false

}

]

},

"parameters": {

"mode": "CREATE_OR_UPDATE",

"generateResultFiles": false,

"generateErrorFiles": true

}

}

]

}

The example above is a single file ETL, but this option also allows you to process multiple files at once (this is where the "fileCodes" and "fileNamePattern" parameters matter). You can find an example of multifiles ETL here

Triggering an ETL execution by API

Once the ETL definition has been created, files matching the parameters can be pushed through the following operation:

POST mass-imports/v5/entities/MyEntity/etls/MyEtlId/trigger-execution

While this call can be used to trigger the retrieval of a file from a FTP location, here we are mainly focusing on pushing the file in the body of the API call, as a multipart/form-date body schema.

Here is an example of call to directly push the source file by API.

curl --location --request POST 'https://api3.actito.com/mass-imports/v5/entities/MyEntity/etls/123456/trigger-execution' \

--header 'Authorization: Bearer eyJ0eXAiOiJKV1QiLCJhbGciOiJSUzI1NiJJJJJJJJJJJJJJJJJJJJJJJJJ' \

--form 'inputFile=@\yourfolder\ordergeneration_20241029.zip'

The fileNamePattern in the definition of the ETL is only relevant if you set up a "multifiles" ETL.

In the case of a "monofile" ETL, there is no validation on the name of the file.

When to choose one shot ETL executions?

The format of one shot ETL executions is similar to the v4 imports, in the sense that you need to push 2 files in your API call:

- a file containing the data

- a file containing the parameters of the import, namely the definition of the ETL

One shot ETL executions are mainly useful if there is a need to provide this definition with each call.

This can be the case because it changes frequently and some parameters are variables from your upstream processes (for example: your data is coming from many different partners and the report recipient must change according to the source).

They are also useful for one-off operations that won't be automated, such as data initialization at set-up.

File imports are rarely true "one shots". They are usually recurring, with a pattern where you'll make the same calls with the same parameters, because you are feeding the same table frequently, with the same mapping and import mode.

We therefore believe that in most cases, the newer 'ETL executions triggered by API' explained above is the most suitable option.

Setting up a one shot ETL execution

If you prefer to push the definition of the import in every API call, you should use the one shot ETL v5 operation:

POST /mass-imports/v5/entities/MyEntity/etl-executions

In the specs, it is referred to as ONESHOT standalone ETL execution with provided input file.

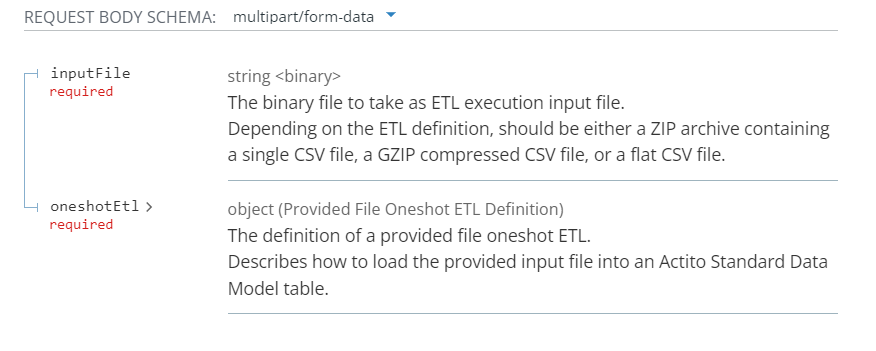

Change the "Request body schema" to multipart/form-data in the dropdown to see the relevant specifications.

Just like the bulk import, it uses a multipart/form-data content type to provide 2 files:

-

the CSV file containing the data: an

application/ziportext/csvbody part called "inputFile". The format of the file remains identical to the v4 bulk import call, with the only difference being that the file is not required to be zipped (though it's recommended). -

the JSON file containing the definition of the import: an

application/jsonbody part called "oneshotEtl". It replaces the "parameters" file from the v4 bulk import call and has a slightly different structure.

Structuring the 'oneshotEtl' JSON file

Two examples of the "oneshotEtl" files are available in the payload request sample of the specs (after selecting the multipart/form-data content type).

Let's review the more comprehensive one:

{

"fileTransfer": {

"input": {

"file": {

"compressionType": "NONE"

}

},

"output": {

"location": {

"type": "TRANSFERBOX",

"entity": "MyEntity"

}

}

},

"inputFileProperties": {

"csvFormat": {

"separator": ","

}

},

"dataLoading": {

"destination": {

"type": "PROFILE_TABLE",

"id": "123",

"attributesMapping": [

{

"header": "email",

"attributeName": "emailAddress"

},

{

"header": "last_name",

"attributeName": "lastName"

},

{

"header": "first_name",

"attributeName": "firstName"

}

]

},

"parameters": {

"mode": "CREATE_OR_UPDATE",

"generateErrorFiles": true,

"generateResultFiles": true

}

},

"reportRecipients": [

"john.smith@actito.com"

]

}

- "fileTransfer": this parameter contains additional information not required in the v4 call.

- "input": used to specify the compression type of the file (as .zip is not mandatory).

- "ouput": the result and error files can be retrieved through dedicated calls (like for the v4 call), or automatically dropped on a file transfer location.

- "inputFileProperties": equivalent to the "format" and "encoding" parameters of the v4 call.

- "dataLoading":

- "destination": defines the "id" and "type" of the table in which the data is imported (this information was passed in the path of the v4 call) and the "attributesMapping" in a similar format to the v4 call ("headerColumn" is now called "header").

- "parameters": defines the mode of the import and whether output files must be generated (like the "mode" and "generateResultFile" of v4 call).

- "dataTransformation": the entirely new parameter (compared to the v4 call) allows to apply transformation to the raw data before it is imported into the table.

- "reportRecipients": defines the e-mail addresses that will receive the execution report, like the parameter of the same name in the v4 call.

Now that you know how to translate the format of your bulk import calls into the new version, you're ready to benefit from the additional features available through one shot ETLs!